A common issue when implementing ITSM tools

Often, while working on projects to implement a new IT service management tool, I have encountered the following remarks from one or more IT engineers. “Why are we implementing that new tool? Our team already has tools to do that.” To do what? Well, to manage the investigation, diagnosis and resolution of incidents, problems, events and so forth. I call these tools technology management tools. These are the tools used, for example, to manage databases, computers, network devices or even applications.

From the perspective of these engineers, playing a second- or third-line support role, use of the “service management” tool is redundant administration. It is extra work that does not help them to do their jobs. On the contrary, they often feel that such tools cause them to lose productivity and to be less effective and efficient in their work.

I am perfectly aware of the fact that many engineers have grown used to the horizontal service management tools that have been imposed on them and perform their jobs as they have been requested to do. It is rather like whiskey (or some other strong drink — I have no anti-Hibernian prejudice). When you first taste it, it is cringingly awful. But, in time, you get used to it and even proclaim its delights — until you get cirrhosis of the liver.

We do need ITSM tools

Service management professionals are nonetheless aware of the need to track progress in resolving incidents and other phenomena. We know that improvement depends on measuring what happens and that measurements are derived from the IT service management tools. In addition:

- the tools provide a record that can be reused to improve future resolutions;

- they support communications with users and customers;

- they help measure and ensure respect of agreed service levels;

- and so forth.

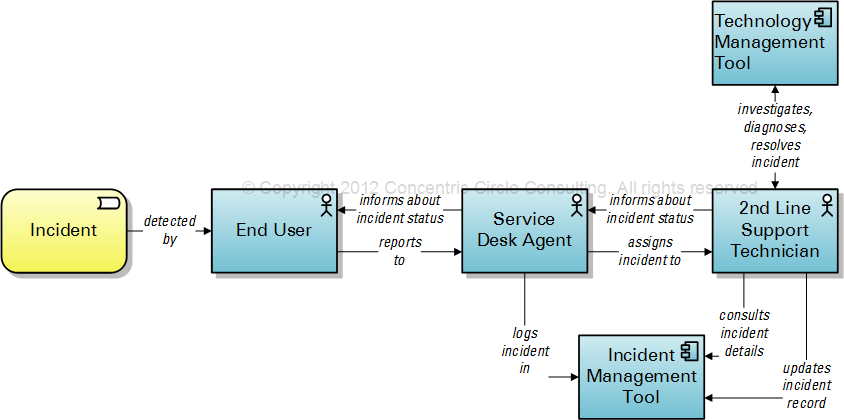

So, convinced by all these good reasons, we forge ahead with the implementation of our service management tools. But the voices of our very precious resources – our experienced and knowledgeable engineers – remain unheard or are soon forgotten. The typical situation today is shown in Figure 1. Should we be surprised when tickets are not updated in a timely fashion or when the quality of the information in the tickets is mediocre, at best?

No easy solution with existing tools

The architecture of the technology and service management tools that exist in today’s marketplace hardly allows us to implement a better solution. The alternative to those products, namely, developing a complete set of tools in-house, is not feasible in most organizations. They lack the know-how, the resources and the desire to maintain such solutions, and they assume that they will never develop a better solution than those provided by the specialist vendors.

A modest proposal…

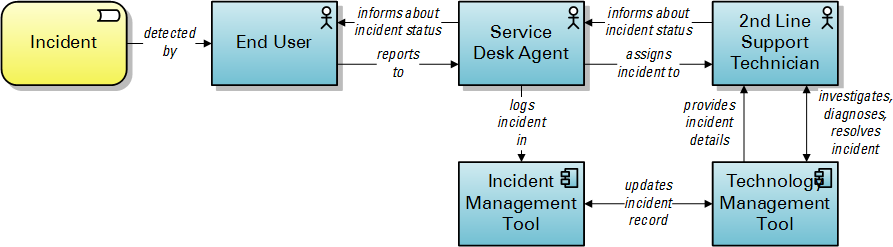

Whence comes my modest proposal. Instead of competing with existing technology management tools, our service management tools should be leveraging their capabilities. I foresee the following scenario, using the example of incident management (see Figure 2). What should happen when an incident is detected, be it by a user who communicates with the service desk, or by an event management or monitoring system, or by some other means? First, the incident is identified, logged and categorized using the incident management tool. However, if that incident needs to be functionally escalated, the details are communicated to the technology management tool used by the function to which the incident is assigned.

The team using that management tool learns of the incident via that same tool, not via email, not via the telephone and not via the service management tool for incidents. The team does its work of investigation, diagnosis and resolution using precisely that same technology management tool. The work done with that tool is recorded automatically and becomes the record of how the function has treated the incident. To the extent that any manually entered commentary is required, it is also done in that tool. The technology management tool communicates this same information to the incident management tool so that the service desk always has visibility on what is happening, without having to bother the 2nd or 3rd line of support. This communication is done in parallel and in the background. When the incident is finally resolved, it is updated first in the technology management tool. This tool then communicates that update automatically to the incident management tool. In short, the technical functions work with only one tool.
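The flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a description of any existing product: the class names, method names, status values and the example incident are all hypothetical, and a real integration would exchange messages over some agreed protocol rather than direct method calls.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Incident:
    """Record held by the (hypothetical) incident management tool."""
    incident_id: str
    summary: str
    status: str = "logged"
    assigned_to: Optional[str] = None
    history: list = field(default_factory=list)


class IncidentManagementTool:
    """Stands in for the service desk's tool; it logs and mirrors updates."""

    def __init__(self):
        self.incidents = {}

    def log(self, incident_id, summary):
        incident = Incident(incident_id, summary)
        self.incidents[incident_id] = incident
        return incident

    def receive_update(self, incident_id, note, status):
        # Called in the background by a technology management tool.
        incident = self.incidents[incident_id]
        incident.history.append(note)
        incident.status = status


class TechnologyManagementTool:
    """Stands in for the tool an engineering team already uses."""

    def __init__(self, name, incident_tool):
        self.name = name
        self.incident_tool = incident_tool
        self.work_queue = {}

    def receive_escalation(self, incident):
        # The team learns of the incident in its own tool.
        self.work_queue[incident.incident_id] = incident.summary
        incident.assigned_to = self.name
        self._publish(incident.incident_id, "escalation received", "in progress")

    def record_work(self, incident_id, note):
        self._publish(incident_id, note, "in progress")

    def resolve(self, incident_id, note):
        # Resolution is recorded here first, then mirrored to the service desk.
        del self.work_queue[incident_id]
        self._publish(incident_id, note, "resolved")

    def _publish(self, incident_id, note, status):
        self.incident_tool.receive_update(incident_id, f"{self.name}: {note}", status)


desk = IncidentManagementTool()
dba_tool = TechnologyManagementTool("database-console", desk)
incident = desk.log("INC-1", "Orders database is slow")
dba_tool.receive_escalation(incident)
dba_tool.record_work("INC-1", "rebuilt fragmented index")
dba_tool.resolve("INC-1", "query times back to normal")
```

In this sketch the engineers only ever call methods on their own tool, while the service desk reads `desk.incidents["INC-1"]` to see the status and history without contacting them.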

Often, the incident needs to be functionally escalated again. In that case, the existing data for the incident would be communicated to whatever technology management tool the new function is using. The same information would be copied to the incident management tool.

What happens if the incident is assigned to someone who does not have a particular tool to manage the relevant technology? In that case, the incident management tool may be used directly to record what is done, as well as any changes to the incident status. This same approach also allows for the gradual migration from an existing tool architecture to the architecture proposed here.
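The routing rule in the two paragraphs above, including the fallback to the incident management tool, might look like the following sketch. The function names, the tool names and the mapping of functions to tools are assumptions made purely for illustration.

```python
# Hypothetical name for the service desk's own tool, used as the fallback.
FALLBACK_TOOL = "incident-management-tool"


def choose_tool(function, tools_by_function):
    """Return the tool that should receive an incident for a given function.

    Functions without a dedicated technology management tool fall back
    to the incident management tool itself.
    """
    return tools_by_function.get(function, FALLBACK_TOOL)


def escalate(history, new_function, tools_by_function):
    """Hand the existing incident data to the next function's tool.

    Returns the target tool and a copy of the history, so the receiving
    tool starts with everything recorded so far.
    """
    target = choose_tool(new_function, tools_by_function)
    return target, list(history)


# Only two functions have been integrated so far in this example.
tools = {"database": "database-console", "network": "network-monitor"}

first_target, _ = escalate(["logged by service desk"], "database", tools)
second_target, copied = escalate(
    ["logged by service desk", "database ruled out"], "facilities", tools
)
```

Because any function missing from the mapping is handled by the fallback, an organization could add integrations one function at a time, which is the gradual migration the text mentions.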

Barriers to adopting the new ITSM tool architecture

There are several barriers to the adoption of this architecture. First, it would be critical for the industry to agree on the protocols for communicating service management data. This is a very low barrier. In fact, such protocols have already been developed and are occasionally in use. Far more complicated protocols have been developed and implemented. Second, the tool publishers have to perceive some form of customer demand for this sort of architecture. Why has such a demand not already arisen? Perhaps it is because the selection of service management tools is driven by service management specialists. If it were driven by the knowledge workers who manage the technologies we use to deliver services, the demand might be more visible.

There is reason to maintain hope, however. Take the example of the most advanced tools in use for release and deployment management (and, by extension, configuration management). These tools are not being designed to meet the requirements of release managers who take their cue from such frameworks as ITIL. Rather, they are created to meet the needs of the agile software development community and the DevOps aficionados. The poor ITIL-based managers eat the dust of their developer colleagues. I believe that this tragedy is largely due to ITIL’s advice that tools must be designed to support processes. And so we get such tools while ignoring the broader architecture of the IT tool set.

There is yet a third barrier to adoption. This is the seeming fragility of depending on a wide array of different tools, as opposed to having a single tool used to support all process activities. It is true that the proposed architecture is technically more complex. However, it has a built-in redundancy that allows for recovery from any failure in any single tool in the value chain. In fact, the architecture allows for the automation of incident tracking that most organizations perform manually, if they perform it at all. In theory, when work on an incident does not seem to advance as per the agreed service levels, it is the role of someone at the service desk to follow up with the persons to whom tasks have been assigned. They need to determine why the task has not progressed as planned and get work back on track. This role still exists. What would happen in case of a technical failure in communicating incident details to another tool? In such a case, the service desk should be able to reactivate the interface to get the process moving again. If a tool is simply unavailable, the incident management tool can always act as an exceptional backup. Given this backup and redundancy, the proposed architecture is actually more robust, even though it might be technically more complex.
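The two safeguards mentioned above, automated tracking of stalled incidents and recovery from a failed interface, can be illustrated with a short sketch. Everything in it is an assumption for the sake of the example: the function names, the one-hour silence threshold, the retry count and the use of `ConnectionError` to represent an interface failure.

```python
from datetime import datetime, timedelta


def find_stalled(last_update_by_incident, now, max_silence):
    """Return the ids of incidents with no update within max_silence."""
    return sorted(
        incident_id
        for incident_id, last_update in last_update_by_incident.items()
        if now - last_update > max_silence
    )


def deliver(send, payload, attempts=3):
    """Try to pass incident details to another tool.

    `send` is any callable that raises ConnectionError when the interface
    is down. After the given number of failed attempts the caller is told
    to fall back to the incident management tool.
    """
    for _ in range(attempts):
        try:
            send(payload)
            return "delivered"
        except ConnectionError:
            continue
    return "fallback"


now = datetime(2024, 1, 1, 12, 0)
updates = {
    "INC-1": datetime(2024, 1, 1, 11, 50),
    "INC-2": datetime(2024, 1, 1, 9, 0),
}
stalled = find_stalled(updates, now, timedelta(hours=1))
```

A service desk could run a check like `find_stalled` on a schedule and follow up only on the incidents it returns, rather than polling every assignee by hand.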

Why we should move to the new architecture

In spite of these barriers, the benefits of the new architecture are directly related to the goals and objectives of incident management:

- it is more automated, and therefore faster, encouraging quicker incident resolution

- it helps incident resolvers focus on the work of resolution, not on the administration of updating records

- it combines the advantages of a best of breed approach to tooling, on the one hand, and an integrated solution approach, on the other.

Conclusion

The examples provided above are all drawn from the management of incidents. This area is perhaps the one most easily understood by the greatest number of practitioners. The new architecture is nonetheless applicable in a wide variety of areas, including the management of events, problems, changes (to a certain extent), etc.

The implementation of any such change in the architecture of IT management tools is not likely to come from the tool vendors themselves. They would not take the risks of developing such technology without the perception of some demand coming from customers. Therefore, the next step should be a discussion and analysis with as wide an array as possible of IT service provider organizations. The purpose of this discussion is to refine our understanding of the underlying issues and to determine how the new architecture could help to resolve them, without creating insoluble issues of its own.

Dear Robert,

The solution you propose, I think, is very much like a “Service Management Service Bus”, or part of an Enterprise Service Bus. So there are (technology) solutions available to do this.

The big issue I see is with the requirement for all tools involved to understand their role in the entire environment. You basically want a Systems Management tool to support the creation of an incident, and be able to communicate relevant information back to the Service Management tool. But they don’t speak the same language, nor do they (typically) even support a common frame of reference.

So in the end, you’d have to modify the toolsets so as to make them understand each other AND implement an ESB-like infrastructure, including all the challenges of mapping and aligning (because there is no effective, “works in the real world” protocol readily available).

So I think your premise needs all the support it can get:

– tools need to be used where they add value,

– multiple tools / tool sets / suppliers is not a bad thing as such,

– tools need to support the people in doing their work.

[I think this is true for process frameworks as well.]

But the approach is rather oversimplified.

Jos, I think you have understood the issues well and have summarized some of the principal challenges. I agree with you that my short presentation of the concept did not cover all issues and therefore appears to be oversimplified. The question is whether anyone is willing to contribute to developing the concept or willing to lead the way in implementing it.

It is a daunting task, and I’ve only seen models that are either too abstract to implement or too simplistic (mostly aimed at enabling ticket exchange: basic info, no process integration).

Especially daunting, as it needs to unify an area that lacks a proper common context. I’ve actually drafted/sketched a (technology driven) approach for the unification and normalization [so for both service management tools exchanging info and systems and service management systems] (method of mapping, management, dynamic routing) which could be useful.

I am currently trying to implement some of those ideas in an “under development” service support tool, where I need to solve very similar issues. I would like to share those ideas with you “in the near future”, to see if they make sense to you, and maybe use that as a starting point in convincing the world that a unified process doesn’t need a single or all-encompassing tool-set from a single vendor.