Certain users of artificial intelligence systems make the assumption that by reversing the use of a neural network—solving for the inputs rather than solving for outputs—they can use AI towards creative ends. In my view, this approach is a misrepresentation of what we understand by creativity. However, this technique is very useful in analyzing human biases and in understanding what humans think of as important, as the quintessence of things.

This article depends on my understanding of what creativity is, which I describe here. You may wish to look at that article before continuing.

Neural networks and deep learning

The AI technique used to “create” is conceptually simple at a high level. It is based on the deep learning technique of:

- Capturing a variety of inputs

- Processing those inputs via a multi-layered network of varying weights

- Proposing outputs of a certain probability

Written schematically, we can say:

o + error = i (processed by) n

where i represents the inputs, o the outputs and n the trained neural network. The output is often inexact or only probable, thus the error factor. Error may be measured with such metrics as accuracy, precision and recall.

In order to solve for i instead of o, we simply subtract o from both sides of the equation. We assume that the processing will not create a unique output and that there will be some error. Thus, the equation becomes:

error = i (processed by) n – o

We try to get that error as close to zero as is reasonable and useful.
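The idea of solving for i rather than o can be sketched as gradient descent on the input itself, while the network's weights stay frozen. The example below is only an illustration under stated assumptions: the "trained network" is a toy one-layer softmax classifier with made-up weights, and the names `W`, `b`, `softmax` and `solve_for_input` are hypothetical, not taken from any system discussed in this article.

```python
import numpy as np

def softmax(z):
    """Turn raw scores into probabilities that sum to 1."""
    z = z - z.max()  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def solve_for_input(W, b, target, steps=500, lr=0.5, seed=0):
    """Adjust an input i until softmax(W @ i + b) approaches `target`.

    The weights W and b are never changed; only the input moves.
    """
    rng = np.random.default_rng(seed)
    i = rng.normal(scale=0.01, size=W.shape[1])  # start from near-blank input
    for _ in range(steps):
        o = softmax(W @ i + b)
        # Gradient of the cross-entropy error with respect to the input
        grad = W.T @ (o - target)
        i -= lr * grad
    return i, softmax(W @ i + b)

# Hypothetical frozen network: 3 output classes, 4 input features
W = np.array([[ 1.0, -0.5,  0.0,  0.2],
              [-0.3,  0.8,  0.1, -0.4],
              [ 0.0,  0.1, -0.9,  0.6]])
b = np.zeros(3)
target = np.array([0.0, 1.0, 0.0])  # "draw me class 1"

i, o = solve_for_input(W, b, target)
error = float(np.abs(o - target).sum())
print("generated input:", i)
print("network output:", o, "error:", error)
```

Note that the generated input is whatever drives the error toward zero, not a typical member of the class. Nothing in the procedure asks for realism.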

To illustrate, consider a neural network that has been trained to recognize animal species from images of those species. Once trained, the system may be run in reverse to generate images as outputs. For example, such a system might be able to draw pictures based on such input as “yellow-bellied sapsucker” or “chestnut-vented nuthatch”.

Of course, the system designer (not the AI) can further transform the images, such as by rendering them as fractals or applying any of the standard image transformations of which computing is capable. But these transformations simply respond to a programmer’s commands and are not the result of an AI’s creativity. Thus, artists are now using reverse machine learning as a tool for generating images.1 The AI used is merely a tool in the hands of the artist, a sort of highly elaborate paint brush. The ultimate work is the result of the artist’s subjective sensibilities.

You can see in this video a popularized explanation of this method:

Is this creativity?

Compared to such use of AI as an imaging tool, I think we usually consider creativity to be quite a different phenomenon. A creative artist, such as one of the early Cubists, could conceive of a new system for relating color and form, even if the image is figurative in nature and is supposed to represent something real. A choreographer, such as Ohad Naharin, might create a new grammar of human movement in dance. A creative poet uses language, not strictly according to the rules of common usage, but in an inventive way, combining words, symbols and sounds in a way hitherto not imagined. These creations are qualified as “elegant” or “beautiful”. We use the same terms to qualify creations in such abstract disciplines as mathematics or physics.

Can an artificial intelligence using the so-called “deep learning” approach output images and texts? Absolutely. Are they formally correct, following the rules of grammar and syntax for the discipline concerned? For sure. Are they beautiful and do they stir the emotions? Well, maybe, but that is due as much to the beholder as to the creator. Can an artificial intelligence deliberately break the rules and nonetheless create something recognizable as coherent? Can it deliberately create a new canon of beauty? Can it invent a new game that anyone might want to play? I doubt that AI currently has any capability to do so.

As impressive as the demonstration above might be (given our current state of the technology), are we seeing a demonstration of creativity? I don’t think so.

Imagine an apprentice painter going to a museum, setting up an easel in front of a painting and producing a copy. The result is very similar to what is happening with the artificial neural networks above. There is input (the original painting); a very complex network consisting of the apprentice’s eyes, brain, muscles, hands, palette and brushes; and an output (the copy). We judge the copy in terms of its differences from the original, the “errors” in the copy.

But there is a much more important reason for doubting the creativity of the neural network’s activity. This is the fact that the input is a pre-existing object, such as a picture of a bird, and that we judge the output based on its similarity to the input used for training the system in the first place. It is hard to imagine a more conservative, non-creative sort of activity. By the same criteria we would judge Copernicus’ planetary system as rubbish, given that it so poorly reproduces the Ptolemaic system.

Finding the quintessence

I quote the definitions of quintessence as drawn from the Merriam-Webster dictionary:

I submit that the deep learning method used by current artificial intelligences is a method for abstracting from a set of data the essence of one or more things. In some cases, we know in advance what we are looking for and depend on the AI to identify it for us. This is called supervised learning. An AI might perform extremely well in a classification task, such as deciding if a tumor is benign or malignant, but the classification taxonomy is first determined by humans, not by the AI.

How does an AI using machine learning decide that a given image depicts a certain species of bird? It is able, via the progressive processing of the positions, colors and relations among the image’s pixels, to suggest the best match between those pixels and a certain species. At the same time, it is able to reject certain attributes of the pixels as being irrelevant to the task, by weighting them as being of little importance to the task at hand. If there is a background of blue sky, it can decide that the sky’s pixels are not very significant to the analysis of the bird’s species (unless, perhaps, it helps distinguish nocturnal from diurnal species). There is no differential correlation between the blocks of blue pixels outside the shape of the bird and any particular species of bird. Of course, were those blue pixels within the shape of the bird, they might strongly correlate with certain species.

Similarly, if the beak is visible in the image, its shape will correlate with some birds more than others. And so on for the pixels of the tail, the legs and whatever else shows a strong correlation. It is not even necessary to first recognize components of the bird, such as “beak” or “tail”. The semantics of the blocks of pixels are useful to humans for explaining identification, but are not used by the AI.
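The way irrelevant attributes end up with little weight can be seen in a deliberately simplified sketch. It assumes two named features instead of raw pixels (a real image classifier works on pixels and has no such labels), synthetic data, and a plain logistic regression trained by gradient descent. All names and numbers here are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 2000

# Two hypothetical species, labeled 0 and 1
species = rng.integers(0, 2, size=n)

# "beak" differs between the species; "sky" is unrelated to the species
beak = species * 2.0 - 1.0 + rng.normal(scale=1.0, size=n)
sky = rng.normal(size=n)
X = np.column_stack([beak, sky])

# Logistic regression trained by plain gradient descent
w = np.zeros(2)
b = 0.0
for _ in range(500):
    p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted probability of species 1
    w -= 0.5 * (X.T @ (p - species) / n)
    b -= 0.5 * np.mean(p - species)

beak_weight, sky_weight = w
print("beak weight:", beak_weight)
print("sky weight:", sky_weight)
```

Nobody tells the model that the sky is irrelevant. The weight on the sky feature stays near zero simply because it does not correlate with the species labels in the training data.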

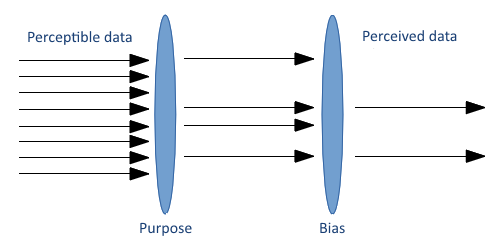

Such successful correlations are really identifying the quintessence of the image when viewed from the perspective of identifying the species of the central object in the image. The notion of quintessence (in the second of the definitions above) is relative to some purpose. The quintessence of a yellow-bellied sapsucker from the point of view of a human bird watcher is probably very different from its quintessence when viewed from the perspective of the bird looking for a suitable mate. The relativity of quintessence is important for our next point.

Showing human biases

The term “bias” is used in many different ways. In the context of statistical analysis, bias may refer to the difference between the observed data and the model or hypothesis used to analyze that data. In neural networks, bias nodes may be added to the layers that are independent of the initial input. I am not using the term in those senses. Rather, I am referring especially to the cognitive and social biases that filter or transform the inputs into the human mind.

Suppose we have trained an artificial intelligence to identify species of birds based on pictures of them. Then we reverse the procedure, as described above, and ask the AI to draw a picture of a certain species of bird. Instead of a realistic picture of a particular bird, we get a caricature of the bird.

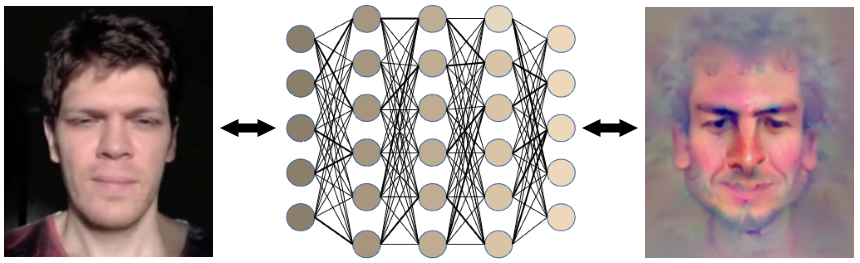

The same is true when we ask an AI to recognize a human based on her or his face and then draw a picture derived from what was recognized. Instead of getting back a realistic picture, we get a caricature of the person. The overall impression given by the caricature is generally correct. However, the particular elements of the image that were useful for identifying the individual are exaggerated.

Bias also occurs in the choice of the training data used for machine learning. If you train an AI to recognize images of musical instruments, you will get different results depending on whether the musicians are included or not in the training images. An AI trained to recognize a human language, such as English, will be subject to the biases of the trainer, who must decide which texts are indeed examples of the English language.

Furthermore, bias is inherent in the decision of whether a hypothesis used to model the training data is good enough for a given purpose. In other words, how tolerant should the system be of false positives and negatives? This depends largely on the purpose of the system, its cost and the level of performance required. The tolerance in an AI used by a service desk to support customers is likely to be much greater than the tolerance in a system used to diagnose life-threatening diseases.
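The trade-off between false positives and false negatives can be made concrete with a small sketch. The scores and labels below are invented for illustration, and `confusion_counts` is a hypothetical helper. The point is only that the same model gives different kinds of errors depending on where a human sets the decision threshold.

```python
import numpy as np

def confusion_counts(scores, labels, threshold):
    """Return (false positives, false negatives) at a given decision threshold."""
    predicted = scores >= threshold
    actual = labels.astype(bool)
    false_positives = int(np.sum(predicted & ~actual))
    false_negatives = int(np.sum(~predicted & actual))
    return false_positives, false_negatives

# Hypothetical model scores and the true labels for eight cases
scores = np.array([0.05, 0.20, 0.35, 0.40, 0.55, 0.60, 0.75, 0.90])
labels = np.array([0,    0,    1,    0,    0,    1,    1,    1])

low_threshold = confusion_counts(scores, labels, threshold=0.3)
high_threshold = confusion_counts(scores, labels, threshold=0.7)

print(low_threshold)   # (2, 0): two false alarms, no missed cases
print(high_threshold)  # (0, 2): no false alarms, two missed cases
```

A screening tool for a serious disease would probably prefer the low threshold, accepting false alarms in order to miss fewer cases, while a system where false alarms are costly might prefer the high one. That preference is a human decision, not something the model discovers.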

It’s important to remember that the correlations made by an AI between the input and what it has been trained to do are not intrinsic to the things being correlated. They correlate only because the analyst considers the attributes being measured as important. Let’s return to the example of bird recognition. For the bird watcher who wants to record sightings of as many different species as possible in her notebook, the important correlations concern the things that allow the bird watcher to make identifications—shape, size, color, song, habitat and so forth. But for the bird looking for a mate, other correlations might be more important—pheromones, mating dance movements, etc. If the detectable attributes are not relevant to your purpose, they are like the patches of blue sky behind the bird in the picture. Although they might be detected, they are weighted as having little importance.

So, we sense a lot of data about our environment and about the objects found around us, but we choose to evaluate and correlate only some of that data, based on our purposes (whether conscious or not). What we pay attention to reflects the biases of what we consider to be important. How is this related to using machine learning in reverse?

Let’s suppose we have trained an AI to recognize bird species based on their pictures. The AI becomes very proficient at making these identifications. Next, we ask the AI to draw a picture of a bird. What can we expect to see in that picture? Will it be an example of a certain species of bird, say, an owl or a vulture? Is there one species that is the quintessential bird?

Or will it be some generic form, such as the neotenic image a child might draw?

In one case, the AI shows a particular species of bird viewed from a variety of angles. This makes sense, given that the AI needs to be able to recognize images of birds from many different angles. I note that there are no views from above a bird, a view that would be of little use to a typical human viewer.

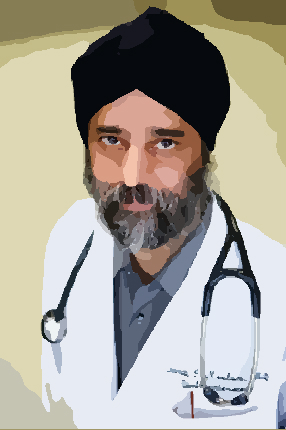

Let’s suppose you have trained an AI to recognize the jobs of people based on their pictures. The AI becomes very proficient at making these identifications. Next, we ask the AI to draw a picture of someone, only based on the job. What will it depict if asked to draw a picture of a medical doctor? Will it be wearing some uniform? What age might it appear to be? What hue will the skin have? Will the figure appear androgynous or will it have a recognizable gender?

The way the AI draws the picture will reflect whatever biases might be inherent in the data used to train the AI, in the hypotheses used to model and weight the data, or in the thresholds that determine whether a given output is suggested.

For example, suppose our AI draws a medical doctor as a South Asian male wearing a Sikh turban. We should ask ourselves whether the AI is presenting a purely random answer to the question, “What does a medical doctor look like?” or if the AI has concluded that a medical doctor is more likely to look like this than like some other depiction. As far as I know, AIs can draw pictures that show multiple views of the same image (see Fig. 10). It could show multiple views of the same Sikh physician. It could even show a pastiche of a wide variety of images of different people, all of whom are recognizable as physicians. So the filtering that we saw above in Fig. 5 also operates in reverse, when an AI is asked to draw a picture. The purposes and biases of the person creating the system that outputs images, as well as the purposes and biases that led to the structure and weighting of the AI’s neural network, will influence what the output looks like.

A good example of bias in an AI is the manifestation of pareidolia in an output image. In this case, the input is a picture of the sky, with a variety of cumulus clouds—a classic setting for pareidolia in humans. Surprise! The AI, too, sees images in the clouds (see Fig. 13).

The analysis of bias in AI and machine learning is an increasingly common topic of discussion. For further information, here are some pertinent articles:

- WTF is the Bias-Variance Tradeoff?

- Algorithm Watch

- Algorithmic Justice League

- Big Data will be Biased if we Let It

- Bias in BigData/AI and ML

In summary, an AI is not more creative than a paint brush or a monkey with a typewriter. The learning mechanisms reflect the biases of the people defining the purpose of the AI and how it is trained. As a result, when an AI is reversed, it reveals the quintessence of the things being classified or analyzed by the system.

The article Creativity, Quintessence and AI by Robert S. Falkowitz, including all its contents, is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.


Notes:

1 See, for example, the work of Mike Tyka at https://www.youtube.com/watch?v=0qVOUD76JOg. See also https://mtyka.github.io/machine/learning/2017/08/09/highres-gan-faces-followup.html for a discussion of his method and https://mtyka.github.io/machine/learning/2017/06/06/highres-gan-faces.html for examples of work in progress.

Fig. 1: By Jebulon — Personal work, CC0, https://commons.wikimedia.org/w/index.php?curid=45912325

Fig. 2: By Michelangelo – Public Domain

Fig. 3: Images of the face from https://www.youtube.com/watch?v=uSUOdu_5MPc

Dear Mr. Falkowitz,

I scrolled across your article and it was great reading it. I know a bit about computers, and people often think of them as magic machines. But they are in fact just processing “1”s and “0”s. That is certainly not the way the human brain works, and this is the enigma. I personally suffer from bipolar depression and take medicine. I have been told that the cause is a dysfunction of the hormone serotonin, which is called the hormone of happiness. I have talked with engineers and psychiatrists, and the latter don’t have a clue how a machine works. They do not know how the electrochemistry in the brain works either. The medicine that I receive is based on empirical knowledge, not technically proven. In a few words, my sickness is that I sometimes feel too happy with no evident cause, or too blue, again for no particular reason. Even the best computing machines that have self-diagnostic tools are merely running math algorithms. Humans learn best by imitating something, like learning to ride a bike, skiing, imitating math equations, etc. I don’t think that AI will learn to imitate for quite a while, not in our lifetime. Einstein believed in God. Tesla believed in God too, and that is the best tool to stay sane. When we don’t understand something we say: that’s God’s will. And yet, there’s a lot that we do not understand!